Algorithmic Bus Stops

Description:

For my final project, I designed a workshop in reaction to two trends: companies using generative design to design their offices, and the increasingly ubiquitous smart city technologies that incorporate algorithms into the materiality of the spaces we inhabit and navigate every day. The workshop uses the bus stop as a focus of study in order to critically engage with and discuss the implications of these new technologies.

Below are video demos of the AR component and photos of the workshop booklet:

Environment:

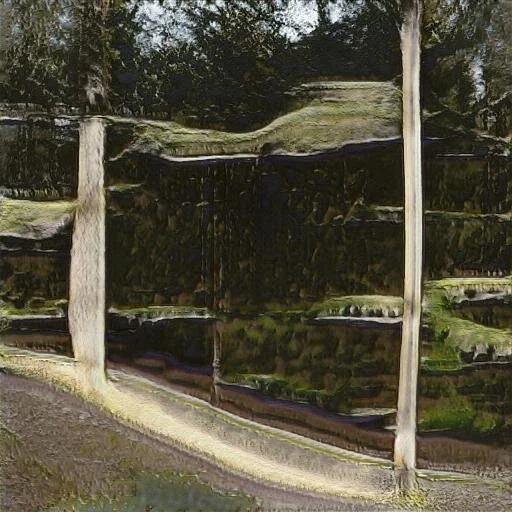

This project builds on my collections project, in which I generated images of imagined bus stops using a StyleGAN model in Runway. Training in Runway relies on transfer learning: a new model is trained on top of an existing one. Within the interface, there is a limited set of base models to choose from, including faces, cupcakes, flowers, and skyscrapers. I had previously used the bedroom model because I felt that the architecture in its images was most similar to the bus stop photos. For this version of the project, I decided to push the design of these bus stops further into the Post Natural by also training models on top of the flower and forest models. I also think this sets up an interesting question for the workshop: the outputs all use the same dataset, but the outcomes look so different - can we work together to identify exactly why? Where are we getting our design inspiration from, and what materials are we using?

Artists and themes:

The methods I used in this project were inspired by The Chair Project by Philipp Schmitt. In terms of form, I was inspired by the work of Vito Acconci (photos above), especially his designs for playgrounds and public spaces.

The goal of this project is to think together about questions of agency, ownership, and which groups benefit in design processes. I hope to bring these questions out into the open with the AR element, which brings these bus stops out from the digital screen into the physical world - participants can get close to inspect them, view them from different angles, and step back to see them from a zoomed-out perspective.

Technology/Site/Audience

The technologies I am using in the piece are the following:

First, I used a Chrome extension to download bus stop images from Google Images.

Next, I used Python to format the images [link].

Then I used RunwayML to train new models based on existing StyleGAN models of bedrooms, flowers and forests [link].

I then scanned the output and downloaded my favorite images. From those, I chose one from each of the three models I had trained.

I used Oculus Medium to re-imagine these images as 3D models.

Finally, I used Spark AR to make a prototype AR app to view the 3D models on top of a MetroCard. (I initially tried Unity but ran into some technical problems with Vuforia.)
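The image formatting step is not spelled out in this write-up, so the following is only a minimal sketch of what such a script might look like. It assumes the goal is to center-crop each downloaded photo to a square and resize it to a uniform size before training. The folder names, the 512-pixel target size, the output file naming, and the helper names `center_square_box` and `format_images` are all hypothetical, and the sketch assumes the Pillow library is installed.

```python
from pathlib import Path


def center_square_box(width, height):
    """Return (left, upper, right, lower) for the largest centered square."""
    side = min(width, height)
    left = (width - side) // 2
    upper = (height - side) // 2
    return (left, upper, left + side, upper + side)


def format_images(src_dir, dst_dir, size=512):
    """Center-crop and resize every image in src_dir, saving JPEGs to dst_dir.

    The 512-pixel default is an assumption, not a value taken from the project.
    """
    from PIL import Image  # Pillow, imported here so the helper above stays stdlib-only

    src, dst = Path(src_dir), Path(dst_dir)
    dst.mkdir(parents=True, exist_ok=True)
    count = 0
    for path in sorted(src.iterdir()):
        if path.suffix.lower() not in {".jpg", ".jpeg", ".png"}:
            continue  # skip anything that is not a common image type
        with Image.open(path) as img:
            img = img.convert("RGB")  # drop alpha channels and palettes
            img = img.crop(center_square_box(*img.size))
            img = img.resize((size, size), Image.LANCZOS)
            img.save(dst / f"busstop_{count:04d}.jpg", quality=95)
        count += 1
    return count
```

Calling `format_images("downloads", "formatted")` would write the square images to a `formatted` folder and return how many files were processed. Center-cropping is only one option; padding the images to a square instead would keep the full frame at the cost of adding borders.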

The output is not site-specific, although I would like to make it more so - in fact, the bus stop images are from all over the world.

The audience has no agency over the form of the work that was designed. Instead, the interaction lies in investigating the output and process by writing in a booklet I designed for the workshop: through the activity and discussion prompts, attendees are encouraged to think critically about and discuss the technologies behind the design process. The goal is to recognize that audience agency in design doesn't currently happen effectively in public design, and to take a step towards thinking about new tools (some of which are used in this project) that could enable it.

Output from the three models. Top row: images generated by the bedroom-based model. Middle row: images generated by the forest-based model. Bottom row: images generated by the flower-based model.

Open Questions/Answered Questions/Surprises

One of my questions was whether AR can be effective in this type of setting - I thought that I would have more answers after this project, but I think that I won’t really know until I run the workshop or do more user testing.

I was also curious whether the transfer from the GAN image to a 3D model would succeed in revealing the process behind the output and prompting questions about the steps involved. I think the models were successful in showing that the same data, used with different methods and algorithms, can have very different outcomes and tell different stories. I think the work also effectively asks whether the final product was made by an algorithm or a human designer, and prompts thinking about new design processes.

I was surprised by how different the 3D model output felt from the over-utilized GAN aesthetic of the source images - I think I’d like to keep pushing this forward in other work. I was also surprised by the difficulty of using Unity to create a simple AR app!

Future Steps

I received very helpful feedback that the structure of the workshop could be improved by focusing it more on a specific issue or location. I’d like to do some research on what this could be, ideally something local. I would also like to run this workshop soon, perhaps at ITP’s Unconference in January. I think running it would be very helpful for understanding what works about this as a workshop and what doesn’t. I would also like to refine the design of the workshop booklet and seek feedback on the questions before running the workshop.

Links

My final presentation can be found here.