For the past few months, I have been collecting photos of bus stops. This came from an idea to redesign the LinkNYC kiosks using machine learning (specifically, a GAN model as a design tool).

I was motivated by these structures because I always wondered why they had to be the same boring color and shape as the sidewalk - there are already enough gray and shiny rectangles in this city! There are a bunch in my neighborhood and people drag out crates to sit around them, charging their phones or browsing the internet. Why wasn’t a seat built into the design? Why weren’t they designed to be social? Could they be? These are meant to be internet hubs specifically to “bridge the digital divide,” but because they are outside, they are harder to access in the winter or when it is raining. Why weren’t they designed with this challenge in mind? And they are so uninviting that many (higher-income) New Yorkers don’t even know what they are, even though they have useful resources for everyone! And why is the portal for people to use a tiny iPad that you have to lean over to read, while the advertisements are displayed on two enormous 50-inch screens? People made each of these design decisions - if we start using algorithms in design, how will these priorities be encoded?

However, there aren’t enough similar objects worldwide to create a dataset large enough for machine learning. I decided to focus on bus stops instead because they are another example of public service structures built for people (the term social infrastructure is also sometimes used) and they are much more common. I was also inspired by the Soviet-era bus stops that my friend recently showed me. Why can’t/shouldn’t bus stops look like this?

For this project, I decided to formalize this collection and create an interface in which the ML-generated bus stops can be explored.

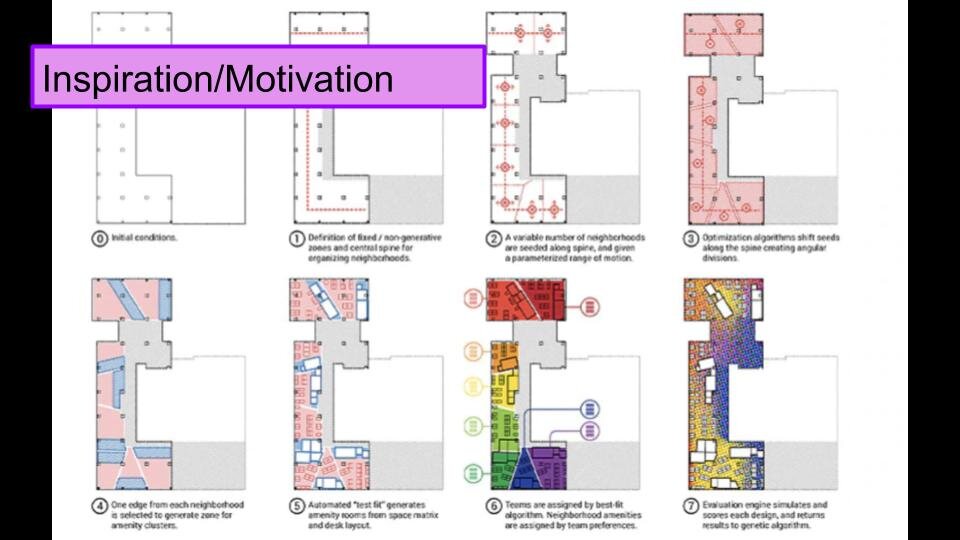

The process is inspired by a podcast I heard about the Autodesk offices being designed using machine learning.

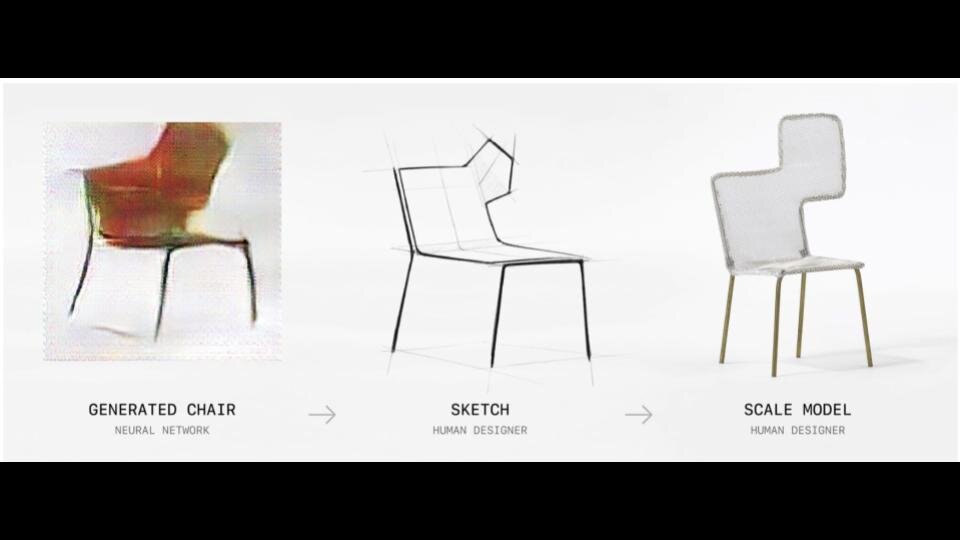

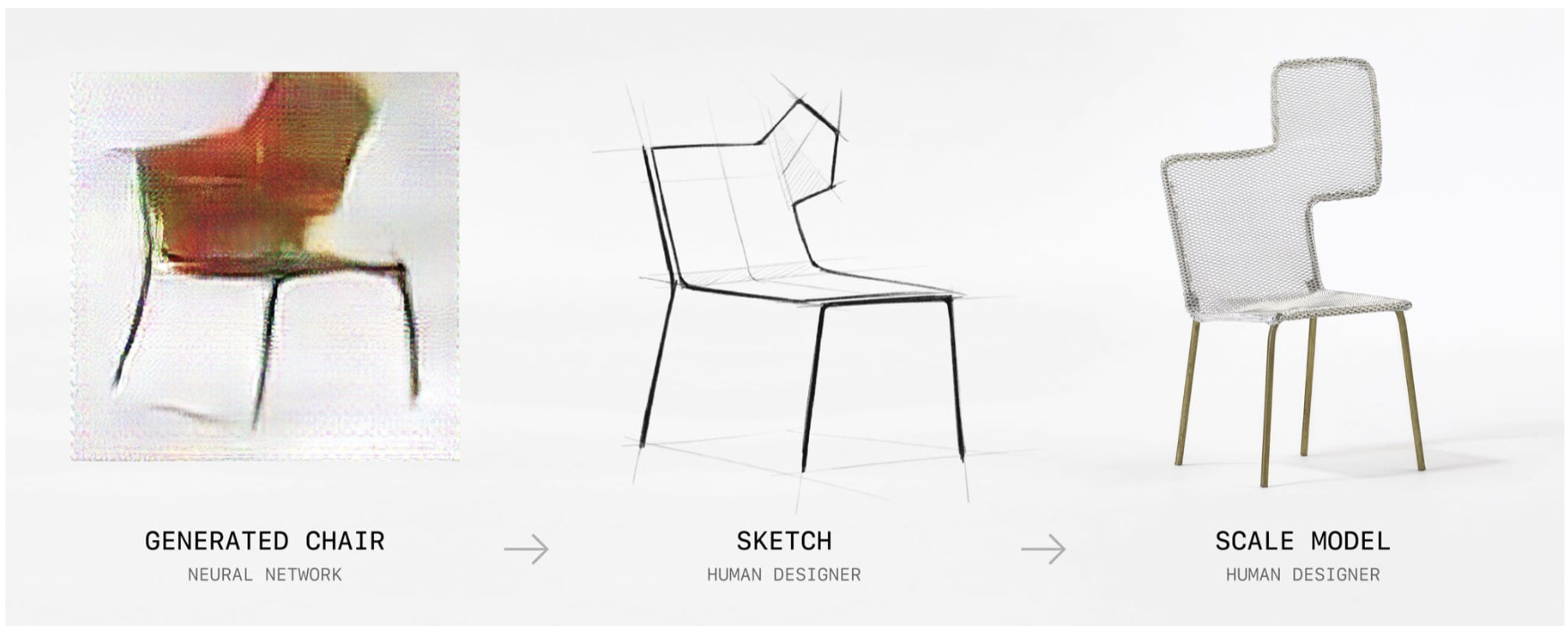

It is also inspired by the Chair Project by Philipp Schmitt at Parsons, which used machine learning to generate new chair concepts.

I tried using the Flickr scraper to find bus stop images, but decided to stick with a Google Chrome extension that gave me more curatorial control during the download process (rather than going through the images after the fact).

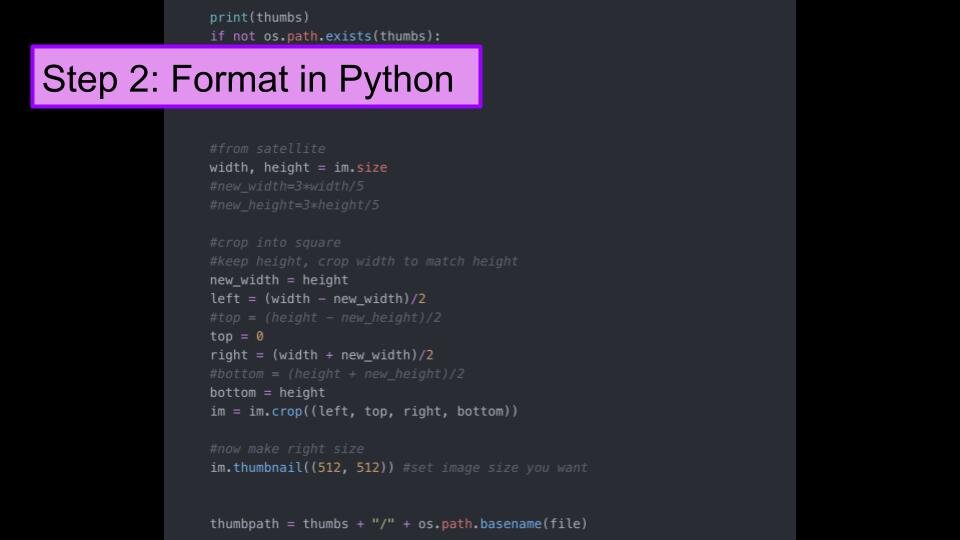

Then I ran the photos through Tega’s Python script, which I modified slightly to crop them to the center square area before resizing. I ended up with 692 processed images.
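For anyone who wants to try a similar preprocessing step, here is a minimal sketch of center-cropping to a square and then resizing. This is not Tega’s script or my modified version of it. The function names, the use of the Pillow library, and the 512-pixel output size are all assumptions made for illustration.

```python
def center_square_box(width, height):
    """Return (left, top, right, bottom) for the largest centered square."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return (left, top, left + side, top + side)


def crop_and_resize(src_path, dst_path, size=512):
    """Center-crop one image to a square, resize it, and save it.

    The 512-pixel default is an assumed output size, not the one used
    in this project.
    """
    # Pillow is imported here so the helper above has no dependencies.
    from PIL import Image

    with Image.open(src_path) as img:
        # img.size is (width, height), which matches the helper's arguments.
        square = img.convert("RGB").crop(center_square_box(*img.size))
        square.resize((size, size)).save(dst_path)
```

Cropping before resizing keeps the bus stop from being stretched, at the cost of losing whatever sits at the edges of wide or tall photos.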

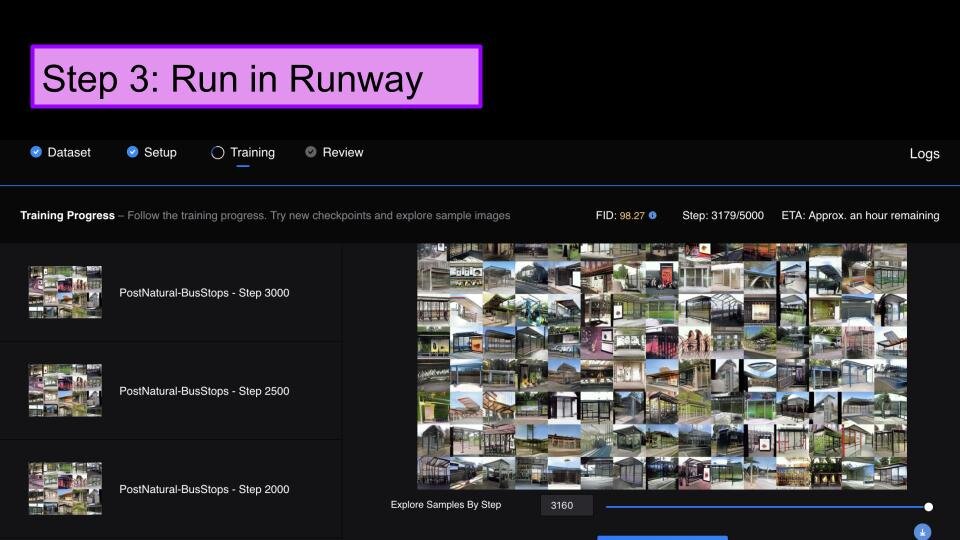

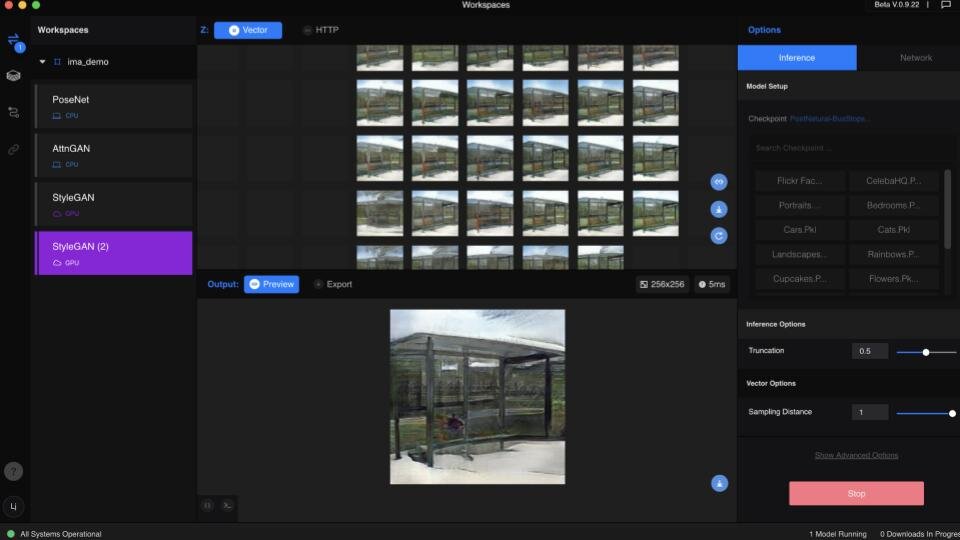

For the GAN training I used a beta feature in Runway (thanks to Ellen and the Runway team!). This feature allows you to use transfer learning to train on top of an existing model. I chose StyleGAN and trained my bus stops on top of the bedroom model.

Runway makes this very easy to do. I uploaded the photos and then pressed “train.” It took 3 hours in total. I first tried this with a smaller subset of the dataset, thinking it would be faster, but when I trained it with the full 692 photos it took the same amount of time. I did get a different result, however (I didn’t include the Soviet bus stops in the first round, so those first results were much more standard-looking).

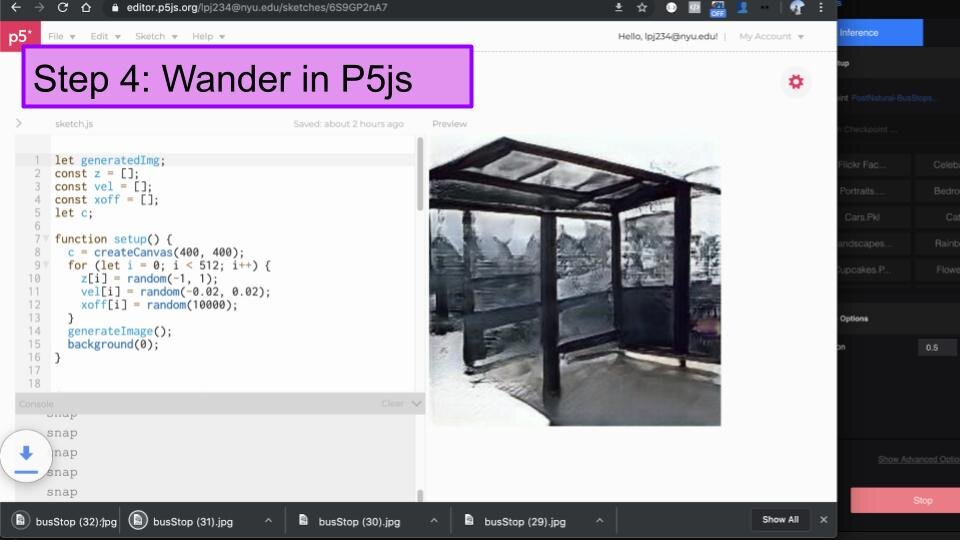

When I had the trained model, I used a p5 sketch Dan Shiffman wrote to connect to Runway and pull the resulting photos into the web browser and “walk” around in the latent space.
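To give a rough idea of what “walking” in the latent space means, here is a small sketch in Python. It is not Dan’s p5 sketch and it does not talk to Runway. It only produces a sequence of latent vectors by interpolating linearly between random waypoints, which is one simple way to move smoothly through the space. The 512-dimension size is the usual StyleGAN latent size and is an assumption about this model, and all of the function names are made up for this example.

```python
import random

LATENT_DIM = 512  # usual StyleGAN latent size; assumed for this model


def random_latent(rng, dim=LATENT_DIM):
    """Sample one latent vector from a standard normal distribution."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def lerp(a, b, t):
    """Linearly interpolate between vectors a and b, with t in [0, 1]."""
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]


def latent_walk(n_waypoints, steps_between, seed=0, dim=LATENT_DIM):
    """Yield latent vectors that move smoothly between random waypoints."""
    rng = random.Random(seed)
    current = random_latent(rng, dim)
    for _ in range(n_waypoints):
        target = random_latent(rng, dim)
        for step in range(steps_between):
            yield lerp(current, target, step / steps_between)
        current = target


# Each vector would be sent to the trained model to get one image (one frame).
frames = list(latent_walk(n_waypoints=3, steps_between=10))
```

Small steps between neighboring vectors are what make the generated bus stops appear to morph into one another rather than jump.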

Here is the result:

The code also downloads the photos so that you can make an animation. I used these to make a simple website that lets you scroll through the latent space. I wanted the viewer to feel a bit lost and overwhelmed by the output and to see this part of the GAN process, which is often hidden. It also introduces friction into the workflow (I’m hoping to use this for design, but it could be used in other cases) by forcing the human to manually go through the photos to find the ones they want to use. The website doesn’t include all of the output, only approximately 300 photos.

I also like that the output is still a collection of photos, but one that is orders of magnitude larger than the original collection. It also obscures the original, leaving the viewer to guess at what it was and, I hope, more explicitly prompting questions about what is behind design decisions.

You can take a walk through this latent bus stop space here: https://lydiajessup.github.io/bus-stop-gan/index.html

Next Steps

I think that if I were to continue this project, I would need to be more intentional about fair use and copyright.

If I were to make another version of the website, I’d like to introduce a bit more control for the user and provide some sort of indication of direction (which way they are going in the latent space - for example, toward more straight lines or more curvy ones).

Another idea could be to train models separately on very different kinds of bus stops and compare the results in some way.

I am also thinking about a way to show the user the original dataset and allow them to look through that in a similar way.

I would also like to make 3D model versions of a few of the bus stops and show them in VR so that people can feel their scale and the effect the different design decisions have.

More

The code for the website is here: https://github.com/lydiajessup/bus-stop-gan

The code for my version of Dan’s p5 sketch is here: https://editor.p5js.org/lpj234@nyu.edu/sketches/bKqfxxLn7

You can see my presentation slides below: